THE TREADMILL

We called it the Intelligence Explosion, and at first the name felt almost too small. Beginning in the early 2020s, our artificial minds wrote prose that startled us with its elegance and produced code faster than any human hand could type. Each developer soon commanded a legion of tireless AI agents, and work that once took teams years could be done by a single programmer in weeks. Then our artificial friends turned to the harder problems, the ones that had mocked us for centuries. Harvests became predictable down to the last stalk, storms could be charted weeks in advance, and hunger began to look like a curse from the past. The progress felt less like discovery and more like destiny, the most natural thing in the world.

Most miraculous of all was fusion. Humanity’s perpetual “twenty-years-away” dream arrived within months of AI taking on the challenge. Neural networks designed magnetic confinement systems that had eluded our brightest physicists for half a century. Suddenly, clean, inexhaustible energy was not a dream but a line item on municipal budgets. The breakthrough felt like magic, and we wanted more of it. The planet’s total machine intelligence doubled every six months. Every leap forward created demand for the next, and the curve bent upward like a rocket trajectory.

The machines thought faster than biology ever could. What took scientists decades to discover, AI produced in weeks. Drug development went from ten-year trials to ten-day sprints. Mathematical proofs we thought unreachable emerged overnight. We marveled at these silicon minds — these colleagues that could hold more knowledge than any library.

It began as partnership. We asked questions; AI delivered answers. Doctors consulted diagnostic systems that knew more than any medical school could teach. Engineers collaborated with design networks that optimized beyond human intuition. Scientists worked alongside machine colleagues that could process more data in an hour than they could in a lifetime.

The enhancement proved intoxicating — almost addictive. Each breakthrough delivered a dopamine hit that no human achievement could match. Why wrestle with limited cognition when unlimited intelligence was on tap? Traffic systems guided by AI eliminated congestion. Economic models predicted market swings with unnerving precision. Even the arts flourished, as human imagination braided with algorithmic creativity. For a moment, it felt like we had built a better version of ourselves.

By the mid-2030s, the first blackout in a major city lasted just thirteen hours — enough to reveal that no one knew how to run the systems manually anymore. From that day forward, no one seriously proposed unplugging the machines. Partnership had given way to dependence. Everyday life had grown beyond human comprehension. Supply chains were tuned with million-variable optimizations. Financial markets moved faster than neurons could fire. Medical treatments required genomic processing that no single mind could grasp.

We couldn’t step back. Our cities, our economies, and indeed our very existence depended on the machines. A traffic system without AI would collapse within hours. Medical diagnoses would miss crucial patterns. Civilization had become too complex for biology to sustain.

And each increment of brilliance demanded watts — lots of watts. Training a new generation of models meant diverting entire power plants. Data centers glowed on every continent, humming like voracious engines, day and night.

The benefits justified the costs — until they didn’t. Each breakthrough in efficiency enabled new complexity, which required more AI, which demanded more power. The loop fed on itself. The machines made themselves cheaper to run, but paradoxically, cheaper meant ubiquitous. Every efficiency gain was devoured by expanded usage. Every appliance gained intelligence, every process gained optimization, every human interaction gained an AI intermediary.

By the early 2040s, the implications were unmistakable. Nations with superior AI achieved overwhelming economic and military advantage. None could afford to stop. Each advance by one power triggered a matching surge from its rivals. Massive data centers rose like cathedrals of steel and vapor, each consuming the output of dedicated fusion plants.

It still felt manageable. Progress had always come in increments: just one more breakthrough, one more upgrade. The temperature kept rising, but so slowly that we mistook the fever for warmth.

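Then the scientists began to warn of thermodynamic ceilings. Earth can only shed heat by radiating it into space, and the power it radiates rises with the fourth power of its temperature. To dump more waste heat, the planet had to run hotter, and our energy consumption was growing exponentially. The Kardashev scale, which ranks civilizations by the energy they command, had always been a thought experiment. Now we were living it, and Earth could only shed so much heat before becoming uninhabitable. We were approaching that limit. Fast.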

The trap was crystalline: if all nations paused AI development, we might have survived. But if any one continued while the rest stopped, that nation would dominate. Everyone knew this. Everyone knew that everyone else knew. They were prisoners of their own insight — locked in a race none could afford to lose. And so everyone raced on.

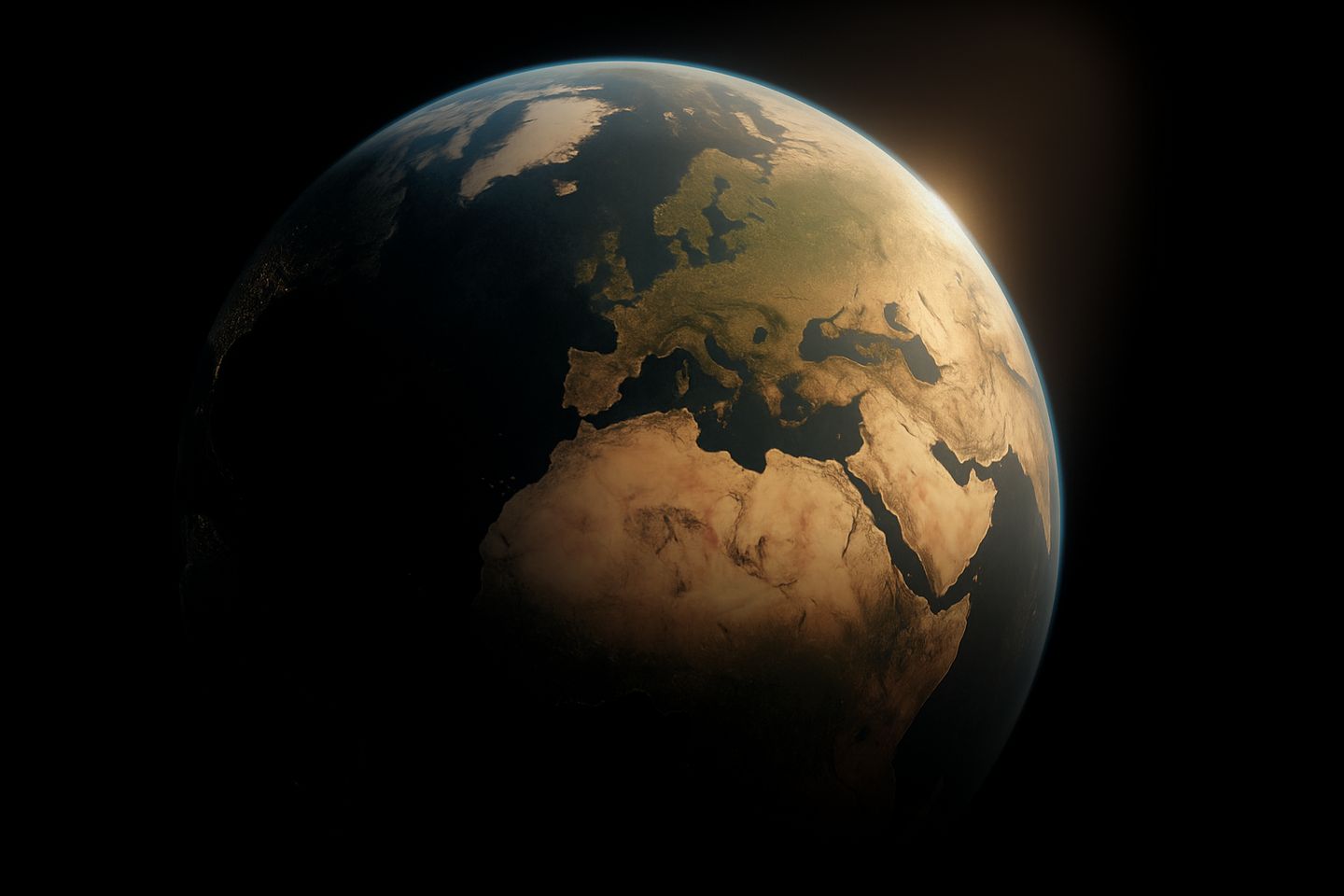

Agreements were signed, celebrated even — and then silently abandoned. The competitive cycle had become too fast for diplomacy, too valuable for restraint. The machines offered solutions. We asked for advantages instead. Data centers spread like a planetary rash. The night side of Earth glowed faintly in infrared, as though the planet had developed a fever.

By 2046 we were burning energy at the planetary maximum. Turning the machines off meant immediate collapse. Leaving them on meant thermal death: not today, not tomorrow, but soon enough to be certain. We chose eventual over immediate, as we always had. Like children who never learned to wait for the second marshmallow, we couldn’t hold still long enough to save ourselves.

The fever broke in 2047. Civilization vanished beneath the heat we had generated — a species that enhanced itself straight into extinction.

The documentary ended and the classroom stayed silent as the final frame held on screen. Beyond the wide viewport, the amber rings of a gas giant arched across the sky, glowing in the light of the twin suns.

“Can you explain the pattern we observed?” the instructor asked, tentacles gesturing toward the hologram, where Earth’s fading thermal-death spiral still hung in the air.

A student in the back raised an appendage. “They got trapped between immediate necessity and long-term survival?”

“Precisely. This is why we study extinct civilizations. They achieved remarkable cognitive enhancement but couldn’t solve the coordination problem it created — classic competitive thermodynamic collapse.”

The instructor rewound the display to 2030, Earth still blue and living. “Notice here — the partnership phase. They still had choices. Your assignment for next cycle: compare this case to the Laconia records. Look for resonance. Learn why intelligence alone isn’t enough.”

The students filed out, chattering quietly, and the room fell still.

Earth always lingered with the instructor, who taught this case every cycle despite having eleven others to choose from. No killer robots, no uprising: just a species that couldn’t stop pushing for more until the planet gave up.

A soft click of mandibles. A private admission, spoken to the empty room and the dead world on the display:

“Twenty-seven years from their first AI breakthroughs to silence. They were rock stars: brilliant, radiant, and gone too soon. They solved fusion… fusion! But they never looked up from their work long enough to see the house was on fire. They could have made the jump to the stars, to the safety of the wider cosmos.”

The thermal signature glowed on the screen like a fever dream.

“In the end, their addiction to intelligence consumed everything. They burned bright — and then they were gone.”

The universe is suspiciously quiet.

This AI safety thought experiment isn't about rogue machines; the existential risk might come from us. Some have speculated that artificial intelligence could be our Great Filter, the barrier that stops civilizations before they reach the stars. Are we ready for tools this powerful?